Fifteen years ago, social media was becoming popular. Facebook was growing fast, and Twitter had just launched. Ten years ago, banks were cautious about such media as it might compromise customer information. Five years ago, many were starting to get the idea, but were still trying to work out how to be social and compliant. Today, they’ve got it, with most having Twitter help profiles and Facebook pages.

It's always the way when you have new technologies. Banks are worried whether they fit with compliance and regulations; customers are wondering why they took so long to get there.

You could say the same about apps, and now we have chatbots. But, going back to the above, how can you ensure the chatbot is compliant in its answers and actions with the regulations currently in force?

Talking with one firm at Pay360, they explained it can be hard unless you have trained the chatbot properly, tested it properly and maintained it properly (I’ve said before that the future jobs will be all about training, explaining and maintaining AI).

Source: MIT Sloan Management Review

The case he cited of where it goes wrong is the Air Canada chatbot, which persuaded a customer to pay full price for tickets. The chatbot conversation started and, with enough evidence from the customer, credited their account with a refund for flights and hotel. But then, before the conversation concluded, it decided that was the wrong decision and took the funds back.

The customer in question, Jake Moffatt, was a bereaved grandchild who paid more than $1,600 for a return flight to Toronto when he in fact needed to pay only around $760 under the airline’s bereavement rates.

The chatbot told Moffatt that he could fill out a ticket refund application after purchasing the full-price tickets to claim back more than half of the cost, but this was erroneous advice. Air Canada argued, amongst other defences, that it should not be held responsible for the advice given by its chatbot. The small claims court disagreed, and ruled that Air Canada should compensate the customer for being misled by its chatbot.

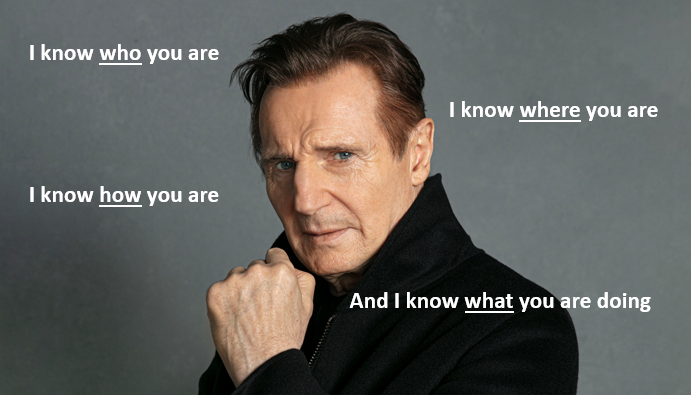

As this technology develops further, it starts to get even more worrying. For example, I use this slide in my keynotes these days …

... you must be careful how you use this information.

In fact, as banks and other firms (think big tech) have so much information about a customer, they will need to use it correctly and with caution. You might say that’s fine but, under GDPR and other rules, are they treating customer information correctly, and do they have permission to use that information?

It’s a subject developing every day, and I’m guessing that we will get to the stage where an AI scanner checks the AI conversations to alert an AI compliance engine to any breach of rules and regulations, which alerts AI-enabled customer services, who contact the customer via the bank’s chatbot … and screw up again.

After all, that seems to be the way the human customer services operations in the back office of most banks work today.

Chris M Skinner

Chris Skinner is best known as an independent commentator on the financial markets through his blog, TheFinanser.com, as author of the bestselling book Digital Bank, and Chair of the European networking forum the Financial Services Club. He has been voted one of the most influential people in banking by The Financial Brand (as well as one of the best blogs), a FinTech Titan (Next Bank), one of the Fintech Leaders you need to follow (City AM, Deluxe and Jax Finance), as well as one of the Top 40 most influential people in financial technology by the Wall Street Journal's Financial News.